Migrating Files into S3 Buckets

Contents

- Introduction

- The hcs3 Interface

- Creating and Managing Buckets

- Adding and Removing Files from Buckets

- Getting Status and Displaying Bucket Contents

- hcs3 properties

Introduction

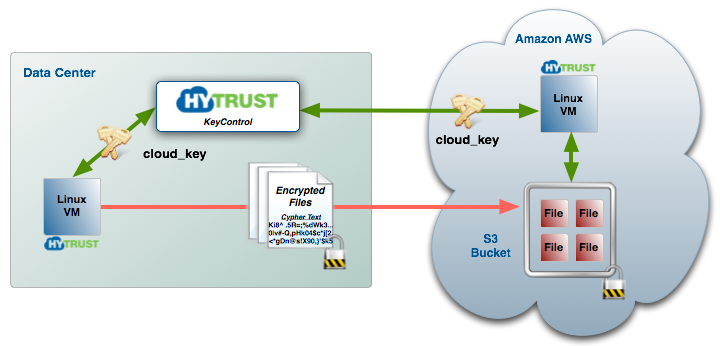

This chapter covers the tools that HyTrust provides to be able to encrypt files, place them within AWS S3 buckets and access the files securely from VMs that reside within the same Cloud VM Set, whether those VMs are running in AWS or running outside of AWS.

Before continuing with this chapter you should become familiar with the keyID interfaces described here which form the basis of sending encrypted data securely between VMs in the same Cloud VM Set.

In simple terms, to access secure S3 buckets, you use the hcs3 command to create an S3 bucket, and then securely add files to the bucket. The files are encrypted before they are copied to S3. VMs within the same Cloud VM Set can then access those files and decrypt them without having to manipulate or manage encryption keys.

To kick things off, let's start with an example. Consider the following figure:

We want to create an S3 bucket, encrypt files and place them in the bucket, and then access the files from the VM running in AWS. Here is the sequence of operations performed within the VM in the data center:

    # hcs3 setstore TKIAN7ZDFBY2BU36DVPQ FZ9gsvIT1oDvuOiJrdSLRqBvmLZPcxzOWT4Qx7y5    # one time operation
    # hcs3 create spate_aws
    # hcs3 add spate_aws file1
    # hcs3 add spate_aws file2
    # hcs3 list spate_aws
    file1
    file2

First we call hcs3 setstore to provide our AWS access key ID and secret. This only needs to be done once. Next we create a bucket called spate_aws. Note that this has the side effect of creating a keyID named after the bucket (the bucket name with an hcs3 prefix, as described in Creating and Managing Buckets). Finally, we add files to the bucket. Each file is encrypted before it is copied to the bucket.
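The sequence above can be sketched as a reusable shell function. This is a minimal sketch rather than part of the HyTrust tooling: the name publish_files is hypothetical, and it assumes that hcs3 setstore has already been run on this VM and that hcs3 returns a non-zero exit status on failure, which this chapter does not state.

```shell
# Hypothetical helper (not shipped with hcs3): create a bucket and add files.
# Assumes `hcs3 setstore` was already run once on this VM, and that hcs3
# exits non-zero when a command fails.
publish_files() {
    bucket=$1
    shift
    # Creating the bucket also creates its keyID.
    hcs3 create "$bucket" || return 1
    for f in "$@"; do
        # Each file is encrypted before it is copied to S3.
        hcs3 add "$bucket" "$f" || return 1
    done
    # Show what ended up in the bucket.
    hcs3 list "$bucket"
}
```

For example, publish_files spate_aws file1 file2 would repeat the session above, minus the one-time setstore call.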

From within the VM in AWS, we can simply access the files as follows:

    # hcs3 get spate_aws file1
    # ls file1

The hcs3 Interface

The hcs3 command has a number of options as follows:

    Usage: hcs3[params]
    cmd:
        status
        setstore aws_access_key_id aws_secret_access_key
        create bucketname
        delete bucketname
        set property=value <bucketname | -a>
        list bucketname
        add [-k keyid] bucketname filename
        add bucketname filename
        rm bucketname filename
        get bucketname filename [filename]    # default should use same filename
        version
        -h | -?
    property:
        tmp : [pathname, default: /tmp]

To use hcs3, you must first create an AWS account and provide your AWS access key ID and secret to hcs3. Do this once, using the hcs3 setstore command. For example:

    # hcs3 setstore TKIAN7ZDFBY2BU36DVPQ FZ9gsvIT1oDvuOiJrdSLRqBvmLZPcxzOWT4Qx7y5

In your own call, replace:

- "TKIAN7ZDFBY2BU36DVPQ" with your AWSAccessKeyId, and

- "FZ9gsvIT1oDvuOiJrdSLRqBvmLZPcxzOWT4Qx7y5" with your AWSSecretKey

Make this call on every VM that requires access to the bucket. Once again, note that these VMs must reside within the same Cloud VM Set. The call only needs to be made once on each VM.

The access key ID and secret are stored in /opt/hcs/etc/awsconfig, which is readable only by root.
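Because this file holds your AWS credentials, it can be worth confirming that its permissions are as restrictive as described. The check below is a sketch under stated assumptions: the function name is_private_file is hypothetical, it only inspects the mode string printed by ls -l, and it does not verify that the owner is root.

```shell
# Hypothetical helper: succeed only if the file grants no permissions to
# group or other. Returns 2 if the file does not exist.
is_private_file() {
    path=$1
    [ -f "$path" ] || { echo "missing: $path" >&2; return 2; }
    # In the `ls -l` mode string (e.g. -rw-------), characters 5-10 are the
    # group and other permission bits.
    perms=$(ls -ld -- "$path" | cut -c5-10)
    [ "$perms" = "------" ]
}
```

Run as root, is_private_file /opt/hcs/etc/awsconfig should succeed on a VM where setstore has been called, if the file is set up as described above.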

The remaining commands are fairly self-explanatory: they create or delete buckets, list the contents of a bucket, add encrypted files to a bucket or extract them from it, and remove files.

Creating and Managing Buckets

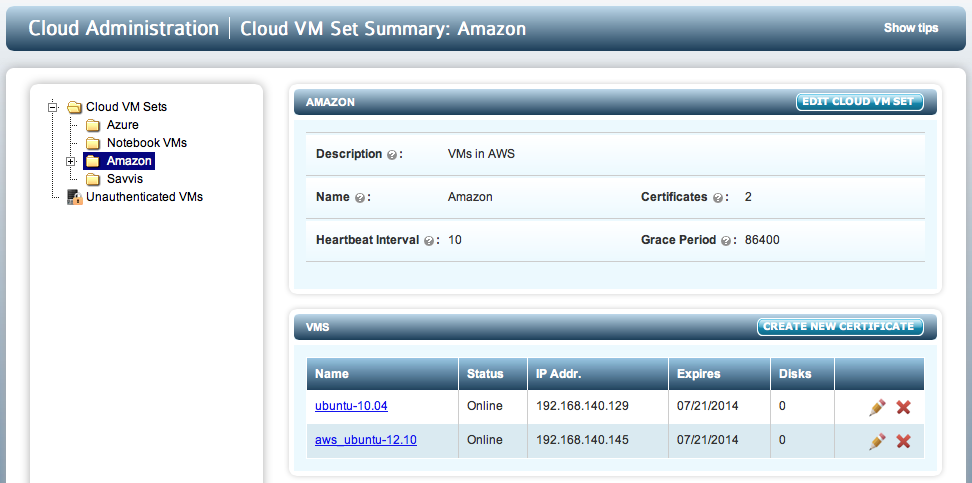

Before uploading encrypted files, the first thing to do is to create a bucket. Consider the following Cloud VM Set:

Let's assume that we want to move encrypted files between these two VMs (in any direction) via S3 buckets. The first thing we must do is to create a bucket. Bucket names have rules as defined by Amazon, which you can find here:

http://docs.aws.amazon.com/AmazonS3/latest/dev/BucketRestrictions.html

Now let's create a bucket called hcs_aws_bucket.

    # hcs3 create hcs_aws_bucket

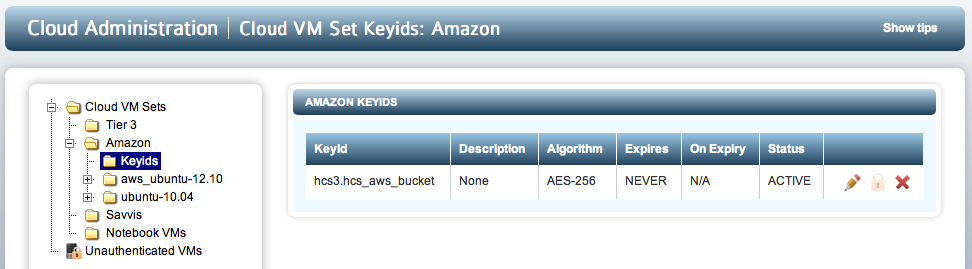

The first thing we do is to create a keyID. You can view this in the webGUI as shown below:

Note that we take the bucket name and add a prefix of hcs3, so that you can distinguish between general keyIDs and keyIDs that are created for S3 usage.

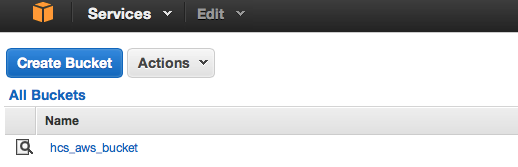

You can also view the bucket by looking through the AWS console:

To remove a bucket, the bucket must first be empty. If it is not, you will see the following error:

    # hcs3 delete hcs_aws_bucket
    Error deleting bucket: The bucket you tried to delete is not empty

If the bucket is empty when you request its deletion, both the bucket and its keyID are deleted. Be warned: if you have used that keyID to encrypt other files, you will no longer be able to decrypt them. We highly recommend that you do not use an S3 keyID for any purpose other than the S3 bucket for which it was created.
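Since a bucket must be empty before it can be deleted, the two steps can be combined. The following is a minimal sketch, not part of hcs3: the name empty_and_delete_bucket is hypothetical, and it assumes that hcs3 list prints one file name per line with no other output and that hcs3 exits non-zero on failure. Keep the warning above in mind, because the final step also deletes the keyID.

```shell
# Hypothetical helper: remove every file from a bucket, then delete the bucket.
# Assumes `hcs3 list` prints exactly one file name per line.
# WARNING: deleting the bucket also deletes its keyID.
empty_and_delete_bucket() {
    bucket=$1
    files=$(hcs3 list "$bucket") || return 1
    while IFS= read -r name; do
        # Skip the empty line produced when the bucket has no files.
        [ -n "$name" ] || continue
        # Redirect stdin so hcs3 cannot consume the remaining file names.
        hcs3 rm "$bucket" "$name" </dev/null || return 1
    done <<EOF
$files
EOF
    hcs3 delete "$bucket"
}
```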

Adding and Removing Files from Buckets

Once the bucket and its associated keyID have been created, you can add files to the bucket, extract them, and delete them from any VM within the Cloud VM Set that owns the bucket. Let's add some files:

    # ls
    file1 file2 file3
    # hcs3 add hcs_aws_bucket file1
    # hcs3 add hcs_aws_bucket file2
    # hcs3 add hcs_aws_bucket file3
    # tar cvfz files.tgz *
    file1
    file2
    file3
    # hcs3 add hcs_aws_bucket files.tgz

And from either the same VM or another VM we can view which files are in the bucket:

    # hcs3 list hcs_aws_bucket
    file1
    file2
    file3
    files.tgz
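The tar step used when adding files.tgz can be wrapped in a helper that archives a whole directory and adds the result in one call. This is a sketch under assumptions: the name add_dir_as_archive is hypothetical, the archive is built in a temporary directory so that it never ends up inside itself, and hcs3 add is given a bare file name from the current directory, as in the session above.

```shell
# Hypothetical helper: bundle a directory into a gzip-compressed tar archive
# and add that archive to a bucket.
#   $1 = bucket name, $2 = directory to archive, $3 = archive file name
add_dir_as_archive() {
    bucket=$1
    dir=$2
    archive=$3
    workdir=$(mktemp -d) || return 1
    # -C switches into the directory so archive members have relative paths.
    tar -czf "$workdir/$archive" -C "$dir" . || { rm -rf "$workdir"; return 1; }
    status=0
    # Add by bare file name, in a subshell so the caller's directory is unchanged.
    ( cd "$workdir" && hcs3 add "$bucket" "$archive" ) || status=$?
    rm -rf "$workdir"
    return "$status"
}
```

For example, add_dir_as_archive hcs_aws_bucket /path/to/files files.tgz, where /path/to/files stands in for the directory you want to upload.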

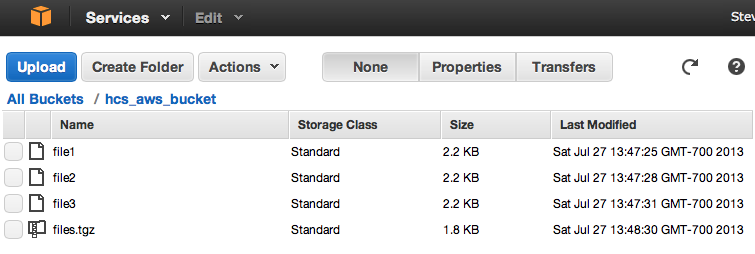

The files are also visible from within the AWS console:

but can only be viewed from within a HyTrust VM.

To pull out a file and decrypt it:

    # hcs3 get hcs_aws_bucket file2

To remove a file:

    # hcs3 rm hcs_aws_bucket file3
    # hcs3 list hcs_aws_bucket
    file1
    file2
    files.tgz
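Before removing a local original, you may want to confirm that the copy in the bucket decrypts to the same bytes. The sketch below is not part of hcs3: the name verify_stored_copy is hypothetical, and it assumes that the optional second file name accepted by hcs3 get is the local output path, which the usage text suggests but does not spell out.

```shell
# Hypothetical helper: fetch a file from a bucket into a scratch directory
# and compare it byte-for-byte with the local file of the same name.
# Assumes `hcs3 get bucket filename outname` writes the decrypted file to outname.
verify_stored_copy() {
    bucket=$1
    file=$2
    tmpdir=$(mktemp -d) || return 1
    status=0
    # Fetch into a scratch directory so the local file is never overwritten.
    hcs3 get "$bucket" "$file" "$tmpdir/copy" && cmp -s "$file" "$tmpdir/copy" || status=1
    rm -rf "$tmpdir"
    return "$status"
}
```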

Getting Status and Displaying Bucket Contents

You can get status information about your Amazon ID and the list of buckets available to you as follows:

    # hcs3 status
    Summary
    --------------------------------------------------------------------------------
    Registered Stores
    --------------------------------------------------------------------------------
    Store Name    ID                      Owner
    --------------------------------------------------------------------------------
    Amazon        AKIAJ7ZDFBY2BUT6DVPQ    spate
    Buckets
    --------------------------------------------------------------------------------
    hcs_aws_bucket
    tmp_space

If you want to see which files are in a particular bucket:

    # hcs3 list hcs_aws_bucket
    file1
    file2
    files.tgz
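In scripts, the listing can be used to test whether a particular file is present. This is a minimal sketch: the name bucket_has_file is hypothetical, and it assumes hcs3 list prints one file name per line.

```shell
# Hypothetical helper: succeed if the bucket listing contains the exact name.
# Assumes `hcs3 list` prints one file name per line.
# Note: a failing `hcs3 list` is indistinguishable from "file not present".
bucket_has_file() {
    # -F: treat the name as a fixed string, -x: match the whole line, -q: quiet.
    hcs3 list "$1" | grep -Fxq -- "$2"
}
```

For example, bucket_has_file hcs_aws_bucket files.tgz && hcs3 get hcs_aws_bucket files.tgz fetches the archive only if it is listed.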

hcs3 properties

The only property that can be changed is the scratch space used to encrypt a file before it is moved to AWS. By default, the scratch space is /tmp. This feature is currently unavailable.